This will be the first GPU to use stacked, 3D chip packaging and incorporate a new NVLink interconnect tech.

SAN JOSE, CALIF. — Nvidia on Tuesday unveiled a future processor architecture, code-named Pascal, that will be the first GPU architecture to use stacked, 3D chip packaging and to incorporate a new PCI Express-based interconnect technology called NVLink.

Pascal, named after the 17th-century French inventor and mathematician Blaise Pascal, will increase memory bandwidth, performance, and capacity over Nvidia’s current Kepler GPU architecture and the next-generation Maxwell chips coming out later this year.

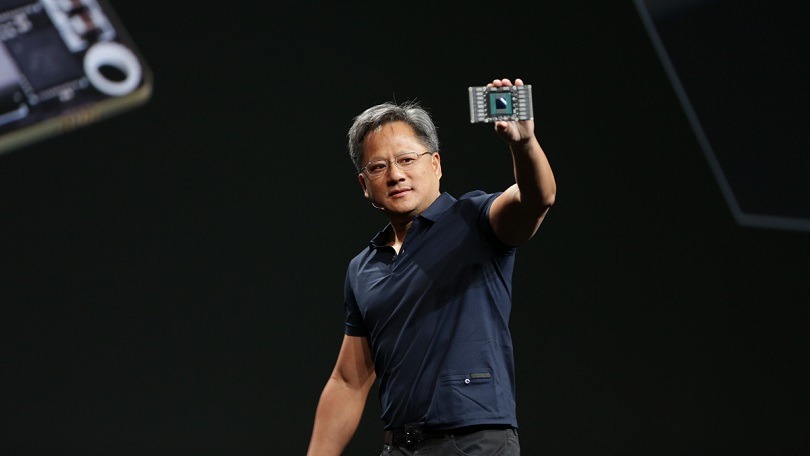

By moving Pascal to a 3D chip-on-wafer process that will stack GPU chips on top of memory, Nvidia will achieve 2.5 times the capacity and four times the energy efficiency of Maxwell while boosting memory bandwidth for multi-GPU scaling even further, Nvidia CEO Jen-Hsun Huang said.

“We can continue to scale with Moore’s Law thanks to Pascal. Moore’s Law is something some people think will eventually fall off, but it won’t happen with Pascal,” he said during his opening keynote at the GPU Technology Conference here.

Combined with a new shared memory architecture and NVLink, a chip-to-chip interconnect based on the PCIe programming model, the design delivers the large gains in memory bandwidth that Nvidia has sought in order to relieve data bottlenecks, according to Huang.

“NVLink is the first enabling technology of our next-generation GPU,” he said, noting that the new interconnect will not just allow CPUs and GPUs to share unified memory but also let system builders link up discrete GPUs in the same fashion. The second iteration of NVLink will also add cache coherency between CPUs and GPUs.

Patrick Moorhead, principal analyst for Moor Insights & Strategy, said the NVLink announcement was an important step in the advancement of heterogeneous computing.

“If only your CPU has a high-speed bus, the other processors in the system suffer. This gives GPUs equal footing at the table. Right now, IBM is the only partner in the hopper on NVLink but Nvidia will get more,” he said.

Moorhead also pointed to Nvidia’s move towards 3D chip stacking as a necessary one to keep pushing compute performance forward while minimizing the energy cost.

“If the overall objective is to have faster compute, your memory needs to talk more quickly with your processor and you need more memory. This 3D stacking enables this. You’ll get lower latency, faster performance, and you’ll need less energy to make it work,” the analyst said.

Also on Tuesday, Nvidia introduced its latest monster GPU for the desktop, the GeForce GTX Titan Z, and gave updates on its Nvidia GRID cloud computing initiative and Tegra mobile processor family.